Huawei Invests $1.5B in Developers, Teases AI Cloud

Huawei plans to invest $1.5 billion in developer tools and teased a bevy of AI cloud services and...

If you are deploying an enterprise QoS scheme, wireless QoS matters to you. On today's episode, we go through the basics of wireless QoS, covering some of the standards, terminology, and thinking required to get your head around how we can prioritize packets over a shared medium. Our guest is Ryan Adzima.

The post Heavy Networking 472: Grappling With Wireless QoS appeared first on Packet Pushers.

In 2019, CISOs struggle more than ever to contain and counter cyberattacks despite an apparently flourishing IT security market and hundreds of millions of dollars in venture capital fueling yearly waves of new startups. Why?

If you review the IT security landscape today, you’ll find it crowded with startups and mainstream vendors offering solutions against cybersecurity threats that have fundamentally remained unchanged for the last two decades. Yes, a small minority of those solutions focus on protecting new infrastructures and platforms (like container-based ones) and new application architecture (like serverless computing), but for the most part, the threats and attack methods against these targets have remained largely the same as in the past.

This crowded market, propelled by increasing venture capital investments, is challenging to assess, and can make it difficult for a CISO to identify and select the best possible solution to protect an enterprise IT environment. On top of this, none of the solutions on the market solves all security problems, so the average security portfolio of a large end-user organization can comprise dozens of products, sometimes from as many as 50 different vendors, that overlap in multiple areas.

Despite the choices, and more than Continue reading

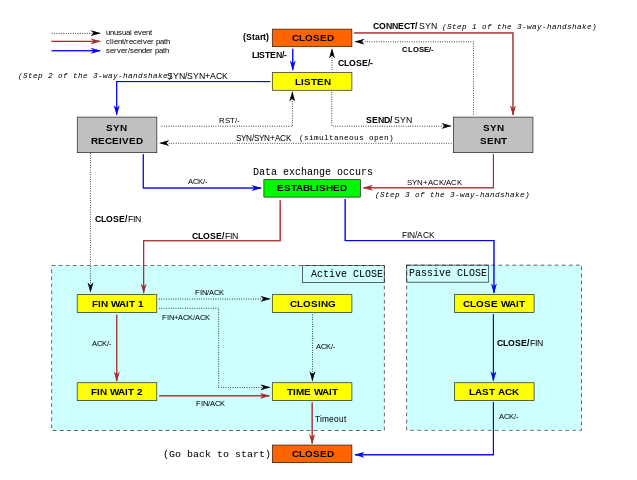

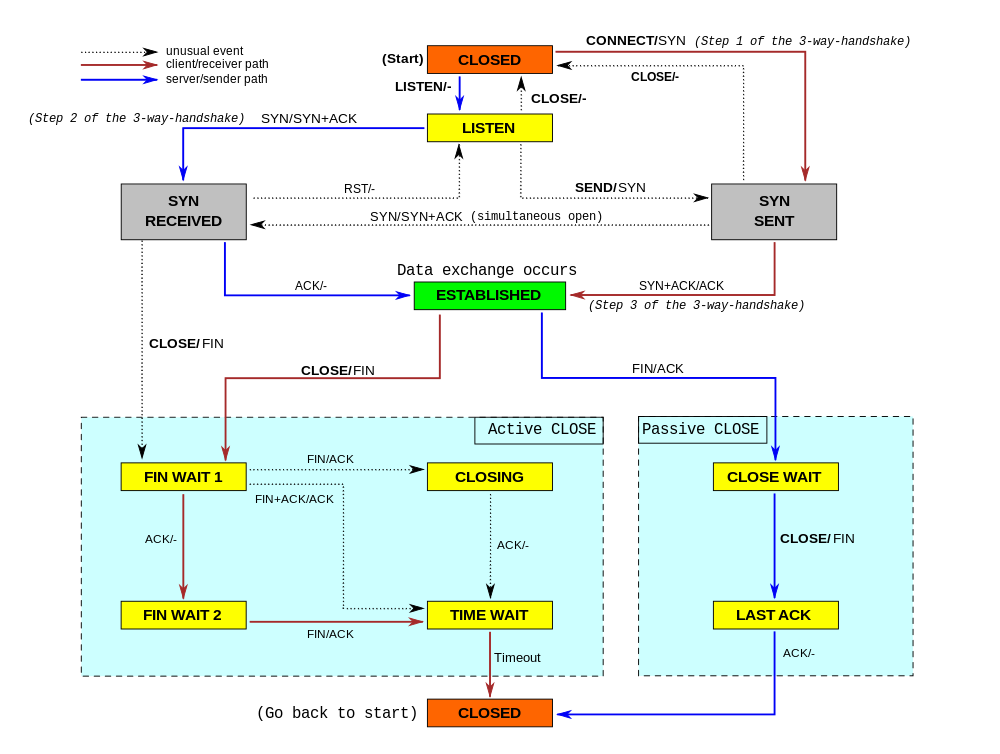

While working on our Spectrum server, we noticed something weird: the TCP sockets which we thought should have been closed were lingering around. We realized we don't really understand when TCP sockets are supposed to time out!

In our code, we wanted to make sure we don't hold connections to dead hosts. In our early code we naively thought enabling TCP keepalives would be enough... but it isn't. It turns out a fairly modern TCP_USER_TIMEOUT socket option is equally important. Furthermore, it interacts with TCP keepalives in subtle ways. Many people are confused by this.

In this blog post, we'll try to show how these options work. We'll show how a TCP socket can time out during various stages of its lifetime, and how TCP keepalives and the user timeout influence that. To better illustrate the internals of TCP connections, we'll mix the outputs of the tcpdump and ss -o commands. This nicely shows the transmitted packets and the changing parameters of the TCP connections.
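As a rough illustration of the two options discussed above, the following Python sketch enables keepalives and sets TCP_USER_TIMEOUT on a socket. The function name, the default values, and the choice to derive the user timeout from the keepalive settings (idle time plus interval times probe count) are assumptions made for this example, not something stated in the excerpt. The TCP_* constants are only exposed on platforms that support them (notably Linux), so the sketch skips any that are missing.

```python
import socket


def configure_dead_peer_detection(sock, idle_s=5, interval_s=3, probes=3):
    """Enable TCP keepalives and a matching TCP_USER_TIMEOUT on `sock`.

    Returns the user timeout, in milliseconds, that was requested.
    All defaults are illustrative assumptions, not recommended values.
    """
    # Assumed rule of thumb: allow the full keepalive cycle to finish
    # (idle period plus every probe interval) before giving up.
    user_timeout_ms = (idle_s + interval_s * probes) * 1000

    # SO_KEEPALIVE turns on keepalive probing for an idle connection.
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_KEEPALIVE, 1)

    tcp_options = (
        ("TCP_KEEPIDLE", idle_s),          # seconds of idleness before the first probe
        ("TCP_KEEPINTVL", interval_s),     # seconds between probes
        ("TCP_KEEPCNT", probes),           # unanswered probes before the socket is dropped
        ("TCP_USER_TIMEOUT", user_timeout_ms),  # ms that sent data may stay unacknowledged
    )
    for name, value in tcp_options:
        option = getattr(socket, name, None)
        if option is not None:  # skip options this platform does not expose
            sock.setsockopt(socket.IPPROTO_TCP, option, value)

    return user_timeout_ms
```

Calling `configure_dead_peer_detection(sock)` on a freshly created TCP socket, before `connect()`, applies the settings to the whole lifetime of the connection. The resulting timers can then be inspected with `ss -o`, as the post does.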

Let's start from the simplest case - what happens when one attempts to establish a connection to a server which discards inbound SYN packets?

$ Continue reading

Wake up! It's HighScalability time:

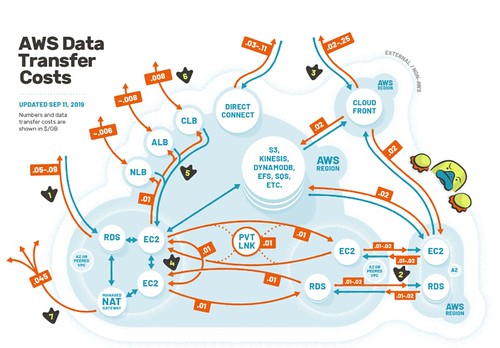

What could be simpler? (duckbillgroup)

Do you like this sort of Stuff? I'd love your support on Patreon. I wrote Explain the Cloud Like I'm 10 for people who need to understand the cloud. And who doesn't these days? On Amazon it has 54 mostly 5 star reviews (125 on Goodreads). They'll learn a lot and likely add you to their will.

Don't miss all that the Internet has to say on Scalability, click below and become eventually consistent with all scalability knowledge (which means this post has many more items to read so please keep on reading)...

Weekly Wrap for Sept. 20, 2019: Kubernetes is central to the VMware-IBM rivalry; Cloudflare's IPO...

Docker support for cross-platform applications is better than ever. At this month’s Docker Virtual Meetup, we featured Docker Architect Elton Stoneman showing how to build and run truly cross-platform apps using Docker’s buildx functionality.

With Docker Desktop, you can now describe all the compilation and packaging steps for your app in a single Dockerfile, and use it to build an image that will run on Linux, Windows, Intel and Arm – 32-bit and 64-bit. In the video, Elton covers the Docker runtime and its understanding of OS and CPU architecture, together with the concept of multi-architecture images and manifests.
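To make the idea of a single multi-architecture build more concrete, here is a minimal Python sketch that assembles a `docker buildx build` invocation for several target platforms. The helper name, the image tag, and the platform list are hypothetical and chosen only for illustration. The sketch assumes a buildx builder is already set up and that the image will be pushed to a registry.

```python
def buildx_command(image_tag, platforms, context="."):
    """Return the argv for a multi-platform `docker buildx build`.

    `platforms` is a list such as ["linux/amd64", "linux/arm64"];
    buildx expects them as one comma-separated value.
    """
    return [
        "docker", "buildx", "build",
        "--platform", ",".join(platforms),
        "--tag", image_tag,
        "--push",  # publish the resulting multi-arch image and manifest
        context,
    ]
```

The returned list can be passed to `subprocess.run(..., check=True)`. For example, `buildx_command("example/app:latest", ["linux/amd64", "linux/arm64", "linux/arm/v7"])` describes one build that targets Intel and both 64-bit and 32-bit Arm.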

The key takeaways from the meetup on using buildx:

Not a Docker Desktop user? Jason Andrews, a Solutions Director at Arm, posted this great article on how to setup buildx using Docker Community Engine on Linux.

Check out the full meetup on Docker’s YouTube Channel:

You can also access the demo repo here. The sample code for this meetup is from Elton’s latest book, Learn Docker in a Month of Lunches, an accessible task-focused Continue reading

Terry Slattery has a distinguished career in networking and is well known for his contributions to the Cisco CLI, being the second person to obtain the CCIE, providing consultation to many organizations, and the list goes on. If it’s happened in networking, there’s a very good chance that Terry has experience in it. Today Terry joins us to talk about how he got started in networking and how he’s navigated a very successful career in the field.

The post Community Spotlight – Terry Slattery appeared first on Network Collective.

How many times have your users come to your office and told you the wireless was down? Or maybe you get a phone call or a text message sent from their phone. If there’s a way for people to figure out that the wireless isn’t working they will not hesitate to tell you about it. But is it always the wireless?

During CWNP Wi-Fi Trek 2019, Keith Parsons (@KeithRParsons) gave a great talk about Tips, Techniques, and Tools for Troubleshooting Wireless LAN. It went into a lot of detail about how many things you have to look at when you start troubleshooting wireless issues. It makes your head spin when you try and figure out exactly where the issues all lie.

However, I did have to raise one point on which I didn’t necessarily agree with Keith:

A few months ago Juniper announced that the JNCIE-ENT lab exam would be getting a much-needed refresh. On November 1st …

The post Juniper JNCIE-ENT Refresh appeared first on Fryguy's Blog.

Another free and open monospaced font for code development, this time from Microsoft. A key differentiator is the inclusion of ligatures for programming symbols (see below). Ligature support is rare among text editors and very rare for TTF encoded fonts. It's more common to see OTF ligatures supported. Also, no italics support yet. Creating fonts […]

The post Cascadia Code | Windows Command Line Tools For Developers appeared first on EtherealMind.

Network engineers for the last twenty years have created networks from composable logical constructs, which result in a network of some structure. We call these constructs “OSPF” and “MPLS”, but they all inter-work to some degree to give us a desired outcome. Network vendors have contributed to this composability and network engineers have come to expect it by default. It is absolute power from both a design and an implementation perspective, but it’s also opinionated. For instance, spanning-tree has node level opinions on how it should participate in a spanning-tree and thus how a spanning-tree forms, but it might not be the one you desire without some tweaks to the tie-breaker conditions for the root bridge persona.

Moving to the automated world primarily means carrying your existing understanding forward, adding a sprinkle of APIs to gain access to those features programmatically and then running a workflow, task or business process engine to compose a graph of those features to build your desired networks in a deterministic way.

This is where things get interesting in my opinion. Take Cisco’s ACI platform. It’s closed and proprietary in the sense of you can’t change the way it works internally. You’re lumped with a Continue reading

Privacy statements are both a point of contact to inform users about their data and a way to show governments the organization is committed to following regulations. On September 17, the Internet Society’s Online Trust Alliance (OTA) released “Are Organizations Ready for New Privacy Regulations?” The report, using data collected from the 2018 Online Trust Audit, analyzes the privacy statements of 1,200 organizations using 29 variables and then maps them to overarching principles from three privacy laws around the world: the General Data Protection Regulation (GDPR) in the European Union, the California Consumer Privacy Act (CCPA) in the United States, and the Personal Information Protection and Electronic Documents Act (PIPEDA) in Canada.

In many cases, organizations lack key concepts covering data sharing in their statements. Just 1% of organizations in our Audit disclose the types of third parties they share data with. This is a common requirement across privacy legislation. It is not as onerous as having to list all of the organizations; simply listing broad categories like “payment vendors” would suffice.

Data retention is another area where many organizations are lacking. Just 2% had language about how long and why they would retain data. Many organizations have Continue reading

After identifying some of the challenges every network solution must address (part 1, part 2, part 3) we tried to tackle an interesting question: “how do you implement this whole spaghetti mess in a somewhat-reliable and structured way?”

The Roman Empire had an answer more than 2000 years ago: divide-and-conquer (aka “eating the elephant one bite at a time”). These days we call it layering and abstractions.

In the Need for Network Layers video I listed all the challenges we have to address, and then described how you could group them in meaningful modules (called networking layers).

You need a free ipSpace.net subscription to watch the video, or a paid ipSpace.net subscription to watch the whole webinar.

We’ve been covering papers from VLDB 2019 for the last three weeks, and next week it will be time to mix things up again. There were so many interesting papers at the conference this year though that I haven’t been able to cover nearly as many as I would like. So today’s post is a short summary of things that caught my eye that I haven’t covered so far. A few of these might make it onto The Morning Paper in weeks to come, you never know!

On the heels of our recent update on image tag details, the Docker Hub team is excited to share the availability of personal access tokens (PATs) as an alternative way to authenticate into Docker Hub.

Already available as part of Docker Trusted Registry, personal access tokens can now be used as a substitute for your password in Docker Hub, especially for integrating your Hub account with other tools. You’ll be able to leverage these tokens for authenticating your Hub account from the Docker CLI – either from Docker Desktop or Docker Engine:

docker login --username <username>

When you’re prompted for a password, enter your token instead.

The advantage of using tokens is the ability to create and manage multiple tokens at once so you can generate different tokens for each integration – and revoke them independently at any time.
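For integrations that cannot answer an interactive prompt, the same login can be scripted. The sketch below is one possible approach, not something described in the post: it relies on the `--password-stdin` flag of `docker login` to pass the token on standard input, and the function names are hypothetical.

```python
import subprocess


def login_command(username):
    """Return the argv for a non-interactive `docker login`."""
    return ["docker", "login", "--username", username, "--password-stdin"]


def login_with_token(username, token):
    """Run `docker login`, supplying the access token on stdin.

    Passing the token on stdin keeps it out of the process arguments
    and the shell history. Raises CalledProcessError if the login fails.
    """
    return subprocess.run(
        login_command(username),
        input=token.encode("utf-8"),
        check=True,
    )
```

A script would typically read the token from a secret store or an environment variable of its own choosing and call `login_with_token(username, token)`. Because each integration can have its own token, revoking one does not affect the others.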

Personal access tokens are created and managed in your Account Settings.

From here, you can:

Note that the actual token is only shown once, at the time Continue reading