Network Break 445: Juniper Pairs With ChatGPT, Microsoft To Unpair Teams In The EU

Today on Network Break we discuss Juniper integrating ChatGPT with its AI digital assistant, Microsoft's plan to unbundle Teams in the EU to fend off regulators, financial results from soon-to-be-paired Broadcom and VMware, a 5G follow-up, and more.

The post Network Break 445: Juniper Pairs With ChatGPT, Microsoft To Unpair Teams In The EU appeared first on Packet Pushers.

VMware Takes a Full-Stack Approach in Addressing the Retail Edge

A full-stack approach to retail edge offers retailers a way to optimize operations and adapt to changes in a post-pandemic world.

Random Thoughts on Zero-Trust Architecture

When preparing the materials for the Design Clinic section describing Zero-Trust Network Architecture, I wondered whether I was missing something crucial. After all, I couldn’t find anything new when reading the NIST documents – we saw everything they describe 30 years ago (remember Kerberos?).

In late August I dropped by the fantastic Roundtable and Barbecue event organized by Gabi Gerber (who runs Security Interest Group Switzerland) and used the opportunity to join the Zero Trust Architecture roundtable. Most other participants were seasoned IT security professionals with a level of skepticism approaching mine. When I mentioned I failed to see anything new in the now-overhyped topic, they quickly expressed similar doubts.

On Infrastructure as Code and Bit Rot

The architecture of the infrastructure-as-code (IaC) tooling you use will determine the level to which your IaC definitions are exposed to bit rot.

This is a maxim I have arrived at after working with multiple IaC tool sets, both professionally and personally, over the last few years. In this blog post, I will explain how I arrived at this maxim by describing three architectural patterns for IaC tools, each with differing levels of risk for bit rot.

Is It Time to Clean Up Your Network Management Tool Bench?

Network management tool sprawl is getting in the way of network management. It’s time for IT to do something about it.

Connection coalescing with ORIGIN Frames: fewer DNS queries, fewer connections

This blog post summarizes a Cloudflare research paper, presented at the ACM Internet Measurement Conference, that measures and prototypes connection coalescing with ORIGIN Frames.

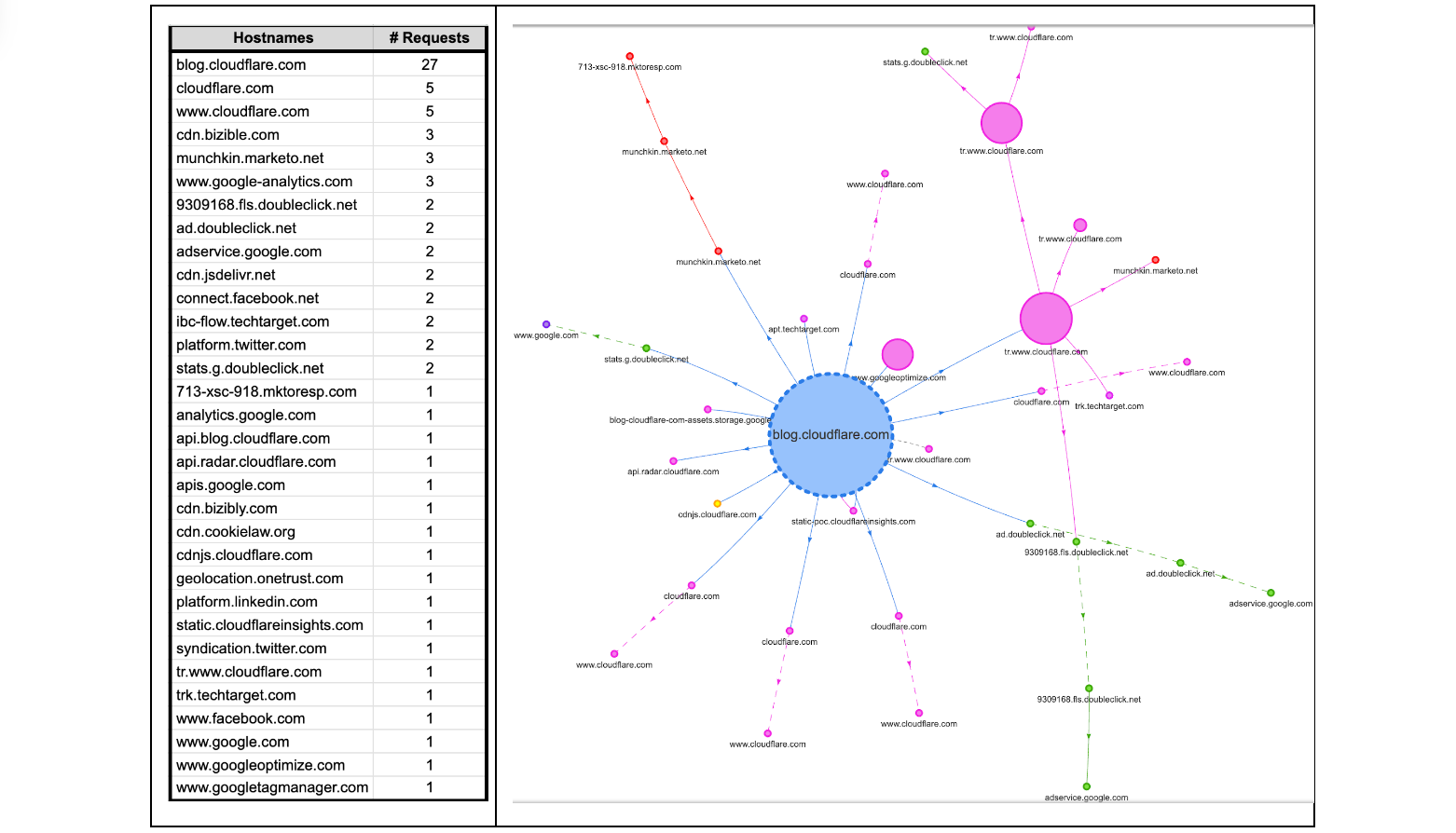

Some readers might be surprised to hear that a single visit to a web page can cause a browser to make tens, sometimes even hundreds, of web connections. Take this very blog as an example. If it is your first visit to the Cloudflare blog, or it has been a while since your last visit, your browser will make multiple connections to render the page. The browser will make DNS queries to find IP addresses corresponding to blog.cloudflare.com and then subsequent requests to retrieve any necessary subresources on the web page needed to successfully render the complete page. How many? Looking below, at the time of writing, there are 32 different hostnames used to load the Cloudflare Blog. That means 32 DNS queries and at least 32 TCP (or QUIC) connections, unless the client is able to reuse (or coalesce) some of those connections.
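The reuse decision behind coalescing can be modeled with a small Python sketch. This is a simplified model under stated assumptions, not Cloudflare's prototype or any browser's actual logic: certificate matching is exact-name only (no wildcards), DNS is a static lookup table, and the function `load_page`, the hostnames, and the addresses are all hypothetical. Browsers typically reuse an existing HTTP/2 connection for a new hostname only if the certificate covers that name and a DNS lookup shows it resolves to the same IP address, so a query is still needed. An ORIGIN frame (RFC 8336) lets the server announce which hostnames it is authoritative for, so the client can skip that lookup.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Connection:
    ip: str                      # address the connection was opened to
    cert_names: set[str]         # names the server certificate covers (exact match only, a simplification)
    origin_set: set[str] = field(default_factory=set)  # hostnames announced via ORIGIN frames (hypothetical model)


def load_page(hostnames, dns, certs, origin_frames=None):
    """Return (dns_queries, connections) needed to fetch every hostname.

    dns: hostname -> IP (hypothetical static resolver)
    certs: IP -> names covered by the certificate served at that IP
    origin_frames: IP -> hostnames the server announces in ORIGIN frames (None = no ORIGIN support)
    """
    connections: list[Connection] = []
    dns_queries = 0
    for host in hostnames:
        # ORIGIN path: an open connection already claims this host, so no DNS query is needed.
        if any(host in c.origin_set and host in c.cert_names for c in connections):
            continue
        # Classic coalescing: resolve first, then reuse if the IP matches and the cert covers the host.
        dns_queries += 1
        ip = dns[host]
        if any(c.ip == ip and host in c.cert_names for c in connections):
            continue
        # No reusable connection: open a new one.
        announced = set(origin_frames.get(ip, ())) if origin_frames else set()
        connections.append(Connection(ip, certs[ip], announced))
    return dns_queries, len(connections)


# Usage with made-up names and documentation-range addresses.
hosts = ["blog.example", "static.example", "cdn.example"]
dns = {"blog.example": "192.0.2.1", "static.example": "192.0.2.1", "cdn.example": "192.0.2.2"}
certs = {
    "192.0.2.1": {"blog.example", "static.example", "cdn.example"},
    "192.0.2.2": {"cdn.example"},
}
print(load_page(hosts, dns, certs))  # (3, 2): IP-based coalescing saves a connection, not a query
print(load_page(hosts, dns, certs, {"192.0.2.1": set(certs["192.0.2.1"])}))  # (1, 1)
```

In this toy example, IP-based coalescing folds `static.example` into the first connection but still costs three DNS queries. Once the first server announces all three names in an ORIGIN frame, a single query and a single connection suffice. That mirrors the "fewer DNS queries, fewer connections" claim in the title, though real savings depend on certificate coverage and deployment.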

Each new web connection not only introduces additional load on a server's processing capabilities – potentially leading to scalability challenges during peak usage hours