Who Pays The Price of Redundancy?

No doubt by now you’ve seen the big fire that took out a portion of the OVHcloud data center earlier this week. These kinds of events are difficult to deal with on a good day. This is why data centers have redundant power feeds, fire suppression systems, and the ability to get back up to full capacity. Modern data centers are getting very good at ensuring they can stay up through most events that could affect an on-premises private data center.

One issue I saw alongside the OVHcloud outage was the small group of people who were frustrated that their systems went down when the fire knocked out the racks where their instances lived. More than a couple of comments said that clouds should not go down like this, asked about credit for the time spent offline, or otherwise complained about unavailability. By and large, most of those complaining were running non-critical systems or were using the cheapest possible instances for their hosts.

Aside from the myopia that “cloud shouldn’t go down”, how do we deal with this idea that cloud redundancy doesn’t always translate to single instance availability? I think we …

Heavy Networking 566: Inside Intel’s Strategy To Unlock Data Center Performance (Sponsored)

On today's Heavy Networking we dive into Intel's portfolio (including Tofino, SmartNICs, P4, and more) to understand how it unlocks the compute power of your data center. Our guest is Mike Zeile, Data Center Group Vice President and General Manager of End-to-End Network Applications at Intel. Intel is our sponsor.

The post Heavy Networking 566: Inside Intel’s Strategy To Unlock Data Center Performance (Sponsored) appeared first on Packet Pushers.

Technology Short Take 138

Welcome to Technology Short Take #138. I have what I hope is an interesting and useful set of links to share with everyone this time around. I didn’t do so well on storage links; apologies to my storage-focused friends! However, there should be something for most everyone else. Enjoy!

Networking

- I’ve been interested in learning more about gRPC, so this guide on analyzing gRPC messages using Wireshark may be useful.

- Isovalent, the folks behind Cilium, recently unveiled the Network Policy Editor, a graphical way of editing Kubernetes Network Policies.

- Ivan Pepelnjak, the font of all networking knowledge, has been discussing cloud networking in some detail for a good while now. The latest series of posts (found here and here) is, in my opinion, just outstanding. I want to be like Ivan when I grow up. #BeLikeIvan

- If you work with TextFSM templates (see here for more information), then you might also like this post on writing a vim syntax plugin for TextFSM templates.

- Want/need to better understand IPv6? Denise Fishburne has you covered. Denise also has you covered if you need BGP knowledge.

Security

- The Google Project Zero blog takes a look at iMessage in iOS 14.

- I recently …

In Macedonia, Strengthening IXP.mk’s Peering Infrastructure

The Internet Society has been supporting the development of the Internet in Macedonia by collaborating with the Faculty of Computer Science and Engineering (FCSE) of the Saints Cyril and Methodius University in Macedonia on its IXP.mk project. IXPs play a critical role in bringing faster and more affordable Internet access, and the Macedonian IXP (IXP.mk) was established in June 2018 with technical support from a number of stakeholders.

Switch and MUX to Strengthen Infrastructure

As traffic grew, IXP.mk needed to increase its peering capacity with improved switching capabilities and space for data racks that would allow it to attract new participants to the exchange. In 2020, the Internet Society provided IXP.mk with a switch and two Fiber Optic Multiplexers (Fiber Muxes) that enabled an additional peering location to be established in the Telesmart Telekom data center, making it easier for other major networks and Content Distribution Networks to peer with each other. The Fiber Muxes carry multiple data channels over a single fiber donated by an existing member of IXP.mk.

Critical Service Provider

With a strong peering infrastructure, IXP.mk is now a critical service provider to …

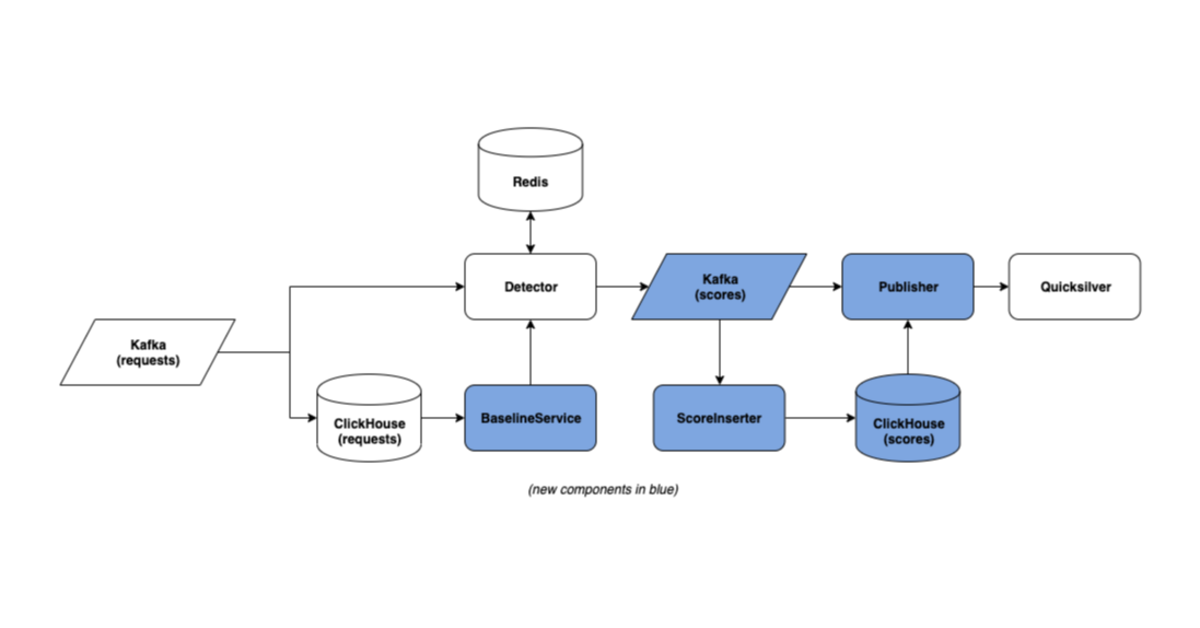

Lessons Learned from Scaling Up Cloudflare’s Anomaly Detection Platform

Introduction to Anomaly Detection for Bot Management

Cloudflare’s Bot Management platform follows a “defense in depth” model. Although each layer of Bot Management has its own strengths and weaknesses, the combination of many different detection systems — including Machine Learning, rule-based heuristics, JavaScript challenges, and more — makes for a robust platform in which different detection systems compensate for each other’s weaknesses.

One of these systems is Anomaly Detection, a platform motivated by a simple idea: because bots are made to accomplish specific goals, such as credential stuffing or content scraping, they interact with websites in distinct and difficult-to-disguise ways. Over time, the actions of a bot are likely to differ from those of a real user. Anomaly detection aims to model the characteristics of legitimate user traffic as a healthy baseline. Then, when automated bot traffic is set against this baseline, the bots appear as outlying anomalies that can be targeted for mitigation.

An anomaly detection approach is:

- Resilient against bots that try to circumvent protections by spoofing request metadata (e.g., user agents).

- Able to catch previously unseen bots without being explicitly trained against them.
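
To make the baseline idea concrete, here is a minimal Python sketch. It is not Cloudflare's implementation: the two behavioral features (requests per minute and distinct paths per minute), the sample data, the per-feature z-score model, and the threshold of 3.0 are all illustrative assumptions. The model is fit only on legitimate traffic, so it needs no bot examples, and it scores behavior rather than the user agent, which a bot can spoof.

```python
import statistics

# Hypothetical legitimate-user sessions: (requests per minute, distinct paths per minute).
BASELINE = [
    (4.0, 3.0), (6.0, 4.0), (5.0, 3.0), (3.0, 2.0),
    (7.0, 5.0), (5.0, 4.0), (4.0, 3.0), (6.0, 3.0),
]


def fit_baseline(samples):
    """Return a (mean, stdev) pair per feature, computed from legitimate traffic only."""
    columns = list(zip(*samples))
    return [(statistics.mean(col), statistics.stdev(col)) for col in columns]


def anomaly_score(features, baseline):
    """Largest absolute z-score across features; higher means less user-like."""
    return max(
        abs(x - mu) / max(sigma, 1e-9)  # guard against a zero-variance feature
        for x, (mu, sigma) in zip(features, baseline)
    )


def is_anomalous(session, baseline, threshold=3.0):
    # Only behavioral features are scored; the spoofable user agent is ignored.
    return anomaly_score(session["features"], baseline) > threshold


model = fit_baseline(BASELINE)
human = {"user_agent": "Mozilla/5.0", "features": (5.0, 4.0)}
scraper = {"user_agent": "Mozilla/5.0", "features": (120.0, 110.0)}  # spoofed UA
print(is_anomalous(human, model), is_anomalous(scraper, model))  # prints: False True
```

A per-feature z-score is about the simplest baseline one could build; a production system would use richer features and a more robust model, but the shape of the approach (fit on healthy traffic, flag what falls far outside it) stays the same.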

So, how well does this work?

Today, Anomaly Detection processes more than …

Taking On DARPA’s FHE Security Challenge

Cybersecurity has never been easy. As the amount of business being done on the internet has grown, enterprises, smaller businesses, HPC institutions and other organizations have had to develop and embrace technologies designed to protect mission-critical data and applications from an increasingly sophisticated underworld of hackers, nation-states and other cyber-criminals. …

Taking On DARPA’s FHE Security Challenge was written by Jeffrey Burt at The Next Platform.

Tuning Up Nvidia’s AI Stack To Run On Virtual Infrastructure

Having to install a new kind of systems software stack and create applications is hard enough. …

Tuning Up Nvidia’s AI Stack To Run On Virtual Infrastructure was written by Jeffrey Burt at The Next Platform.

Video: Cisco SD-WAN Routing Design

After reviewing Cisco SD-WAN policies, it’s time to dig into the routing design. In this section, David Penaloza enumerated several possible topologies, types of transport, their advantages and drawbacks, considerations for tunnel count and regional presence, and what you should consider beforehand when designing the solution from the control plane’s perspective.
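
Tunnel count is easy to underestimate, so a back-of-the-envelope calculation helps when comparing topologies. The Python sketch below is an illustration, not Cisco's sizing method. It assumes every site has the same number of transports, that a full mesh connects every site pair, that the hubs in a hub-and-spoke design also mesh with each other, and that either every local transport builds a tunnel to every remote transport or transports pair only with the same transport type. The function names are hypothetical.

```python
from math import comb


def tunnels_per_site_pair(transports: int, same_transport_only: bool) -> int:
    # Same-type pairing: one tunnel per transport.
    # Otherwise: every local transport meets every remote transport.
    return transports if same_transport_only else transports * transports


def full_mesh(sites: int, transports: int, same_transport_only: bool = False) -> int:
    # Every pair of sites builds tunnels to each other.
    return comb(sites, 2) * tunnels_per_site_pair(transports, same_transport_only)


def hub_and_spoke(sites: int, hubs: int, transports: int,
                  same_transport_only: bool = False) -> int:
    # Spokes connect only to hubs; hubs are assumed to mesh with each other.
    pairs = (sites - hubs) * hubs + comb(hubs, 2)
    return pairs * tunnels_per_site_pair(transports, same_transport_only)


print(full_mesh(100, 2))         # 19800
print(full_mesh(100, 2, True))   # 9900
print(hub_and_spoke(100, 2, 2))  # 788
```

With 100 sites and two transports each, every edge router in a full mesh terminates 99 * 4 = 396 tunnels, while in a two-hub design each spoke terminates only 8. The per-device figure is the one to compare against the tunnel scale of the edge platforms you plan to use.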

Advances Speed Time to Massive IoT Asset Tracking and Monitoring

Enterprises to benefit from COVID-19-driven distribution advances, cost reduction efforts, and energy efficiency options.

How to Improve Employee Morale

We are going through a situation that is unprecedented and heartbreaking. There are so many emotions attached to it right now that naming them one by one would take a significant amount of time. The world is falling apart, and the worst part is that most people still have to work to provide for their families. Whether they are working from home or at the office, anxiety and stress are increasing, and you can do nothing about it.

Workloads are increasing, the health situation is deteriorating, and there is too much uncertainty. That is why companies like ours are working to promote ways you can boost your employees’ morale.

When you ask “How can I improve employee morale?”, we make sure to provide results that can actually help you. You can also visit our website to see the many products available to you.

Here are just a couple of ways you can boost your employees’ morale.

Be Transparent

What your employees need more than anything in the world right now is transparency. They need to know what is going on and what Continue reading