Network Break 401: Google Teases Multi-Gig Home Broadband; New USB Cables Use Slightly Less Plastic

Today's Network Break covers three Google stories including Google Fiber's ambitions for multi-gig Internet and the killing of Stadia. We also discuss a rise in firewall sales, using plant-based materials in USB cables, and more IT news.

Offensive Security & Threat Hunting: The Best Defense is a Good Offense

Offensive security is the future of cybersecurity as the proactive mindset enables threat hunters to be more efficient in detecting vulnerabilities.

Monday Mobility Quick Thoughts

I’m getting ready for Mobility Field Day 8 later this week and there’s been a lot of effort making sure we’re ready to go. That means I’ve spent lots of time thinking about event planning instead of writing. So I wanted to share some quick thoughts with you ahead of this week as well as WLPC Europe next week.

- I remain convinced that half of the objections raised by oversight organizations when it comes to adopting new technology come from the fact that they got caught flat-footed and weren’t ready for it to be popular. Whether it’s the Wi-Fi 6E safety issue or the report earlier this year from the FAA about 5G and airports, it just seems like organizations spend less time doing actual investigation and more time writing press releases about how they aren’t ready to figure it all out yet.

- I also remain cautiously optimistic that the new Apple devices rumored to be coming out later this year, namely the iPad Pro and MacBook Pro with M2 chips, will have Wi-Fi 6E support. Yes, the iPhone didn’t. It’s also a smaller device with less room to add new hardware. The iPad and MacBook have historically gotten […]

Defending against future threats: Cloudflare goes post-quantum

There is an expiration date on the cryptography we use every day. It’s not easy to read, but somewhere between 15 and 40 years from now, a sufficiently powerful quantum computer is expected to be built that will be able to decrypt essentially any encrypted data on the Internet today.

Luckily, there is a solution: post-quantum (PQ) cryptography has been designed to be secure against the threat of quantum computers. Just three months ago, in July 2022, after a six-year worldwide competition, the US National Institute of Standards and Technology (NIST), known for AES and SHA2, announced which post-quantum cryptography they will standardize. NIST plans to publish the final standards in 2024, but we want to help drive early adoption of post-quantum cryptography.

Starting today, as a beta service, all websites and APIs served through Cloudflare support post-quantum hybrid key agreement. This is on by default; no need for an opt-in. This means that if your browser/app supports it, the connection to our network is also secure against any future quantum computer.
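A hybrid key agreement runs a classical key exchange and a post-quantum one side by side and derives the session key from both results, so the connection stays protected as long as either of the two holds. The following is a minimal sketch of that combining step only, not Cloudflare's implementation: the function names are hypothetical, random bytes stand in for the outputs of the two real key exchanges, and the concatenate-then-extract approach (HKDF-Extract from RFC 5869) is an assumption chosen for illustration.

```python
import hashlib
import hmac
import os


def hkdf_extract(salt: bytes, input_key_material: bytes) -> bytes:
    """HKDF-Extract (RFC 5869) instantiated with HMAC-SHA256."""
    return hmac.new(salt, input_key_material, hashlib.sha256).digest()


def combine_hybrid_secrets(classical_secret: bytes, pq_secret: bytes) -> bytes:
    """Derive one key from both shared secrets (hypothetical helper).

    The two secrets are concatenated and fed through the extractor, so an
    attacker has to recover BOTH inputs to reconstruct the output.
    """
    zero_salt = b"\x00" * hashlib.sha256().digest_size
    return hkdf_extract(zero_salt, classical_secret + pq_secret)


# Stand-ins (assumption): in a real handshake these come from a classical
# key exchange and from a post-quantum key encapsulation mechanism.
classical_secret = os.urandom(32)
pq_secret = os.urandom(32)

session_key = combine_hybrid_secrets(classical_secret, pq_secret)
print(len(session_key))
```

Changing either input changes the derived key, which is the property that lets the post-quantum half protect the session even if the classical half is later broken.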

We offer this post-quantum cryptography free of charge: we believe that post-quantum security should be the new baseline for the Internet.

Deploying post-quantum cryptography seems like a […]

Introducing post-quantum Cloudflare Tunnel

Undoubtedly, one of the big themes in IT for the next decade will be the migration to post-quantum cryptography. From tech giants to small businesses: we will all have to make sure our hardware and software are updated so that our data is protected against the arrival of quantum computers. It seems far away, but it’s not a problem for later: any encrypted data captured today (not protected by post-quantum cryptography) can be broken by a sufficiently powerful quantum computer in the future.

Luckily we’re almost there: after a tremendous worldwide effort by the cryptographic community, we know what will be the gold standard of post-quantum cryptography for the next decades. Release date: somewhere in 2024. Hopefully, for most, the transition will be a simple software update then, but it will not be that simple for everyone: not all software is maintained, and it could well be that hardware needs an upgrade as well. Taking a step back, many companies don’t even have a full list of all software running on their network.

For Cloudflare Tunnel customers, this migration will be much simpler: introducing Post-Quantum Cloudflare Tunnel. In this blog post, first we give an overview of how Cloudflare Tunnel […]

Automatic (secure) transmission: taking the pain out of origin connection security

In 2014, Cloudflare set out to encrypt the Internet by introducing Universal SSL. It made getting an SSL/TLS certificate free and easy at a time when doing so was neither free nor easy. Overnight, millions of websites had a secure connection between the user’s browser and Cloudflare.

But getting the connection encrypted from Cloudflare to the customer’s origin server was more complex. Since Cloudflare and all browsers supported SSL/TLS, the connection between the browser and Cloudflare could be instantly secured. But back in 2014 configuring an origin server with an SSL/TLS certificate was complex, expensive, and sometimes not even possible.

And so we relied on users to configure the best security level for their origin server. Later we added a service that detects and recommends the highest level of security for the connection between Cloudflare and the origin server. We also introduced free origin server certificates for customers who didn’t want to get a certificate elsewhere.

Today, we’re going even further. Cloudflare will shortly find the most secure connection possible to our customers’ origin servers and use it, automatically. Doing this correctly, at scale, while not breaking a customer’s service is very complicated. This blog post explains how we are […]
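The idea of picking the most secure origin connection that still works can be sketched as a simple decision over what a probe of the origin reports. This is a hypothetical illustration, not Cloudflare's algorithm: the `OriginProbe` type and `strictest_mode` function are invented for this example, and the mode names are borrowed from Cloudflare's SSL/TLS encryption modes as an assumption, since the excerpt itself does not name them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OriginProbe:
    """Result of test connections to an origin server (hypothetical type)."""
    accepts_tls: bool    # origin completes a TLS handshake
    cert_trusted: bool   # origin presents a certificate that validates


def strictest_mode(probe: OriginProbe) -> str:
    """Return the most secure mode the probed origin can sustain."""
    if probe.accepts_tls and probe.cert_trusted:
        # Encrypted to the origin, and the certificate is validated.
        return "Full (strict)"
    if probe.accepts_tls:
        # Encrypted to the origin, but the certificate is not validated.
        return "Full"
    # Origin only speaks plain HTTP; only the visitor side is encrypted.
    return "Flexible"


print(strictest_mode(OriginProbe(accepts_tls=True, cert_trusted=False)))
```

A real system would also have to confirm that the site still serves the same content under the stricter mode before switching, which is the "not breaking a customer’s service" part the post refers to.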

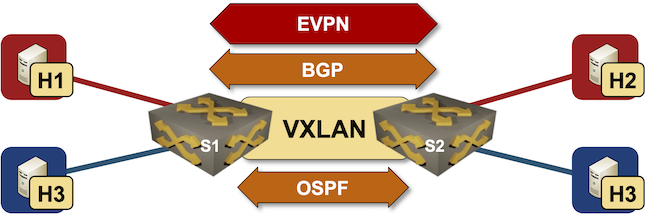

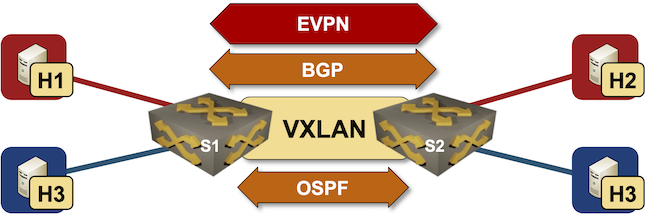

netlab EVPN/VXLAN Bridging Example

netlab release 1.3 introduced support for VXLAN transport with static ingress replication and EVPN control plane. Last week we replaced a VLAN trunk with VXLAN transport; now we’ll replace static ingress replication with EVPN control plane.

Lab topology

Extend SRIOV VFs to Containers

Please read the following Git wiki:

https://github.com/kashif-nawaz/Extending-SRIOV-VFs-to-Containers

Worth Reading: QUIC Is Not a TCP Replacement

Bruce Davie makes an excellent point in his QUIC Is Not a TCP Replacement article – QUIC is not a next-generation TCP, it’s a reliable RPC transport protocol.

What Bruce forgot to mention is that we had a production-grade RPC transport protocol for years – SCTP (Stream Control Transmission Protocol) – but it had two shortcomings:

- It wasn’t invented by the right people;

- It used a different IP protocol number and thus upset every ossified middlebox in the Internet. QUIC hides on top of UDP (because adding extra headers makes at least as much sense as junk DNA).
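The middlebox point can be illustrated with a toy filter. This is a sketch under stated assumptions: the allow-list and both function names are hypothetical, and only the protocol numbers themselves (ICMP 1, TCP 6, UDP 17, SCTP 132) are the real IANA assignments. A device that forwards only the protocol numbers it recognizes drops SCTP, while QUIC passes because on the wire it looks like ordinary UDP.

```python
# IANA-assigned IP protocol numbers for the protocols discussed above.
IP_PROTOCOL_NUMBERS = {"ICMP": 1, "TCP": 6, "UDP": 17, "SCTP": 132}

# Transports that ride inside another protocol's datagrams.
CARRIED_IN = {"QUIC": "UDP"}


def outer_protocol_number(transport: str) -> int:
    """Protocol number a middlebox sees in the IP header for a transport."""
    outer = CARRIED_IN.get(transport, transport)
    return IP_PROTOCOL_NUMBERS[outer]


def middlebox_forwards(protocol_number: int,
                       allowed: frozenset = frozenset({1, 6, 17})) -> bool:
    """Toy 'ossified' middlebox: forwards only ICMP, TCP, and UDP."""
    return protocol_number in allowed


for transport in ("TCP", "SCTP", "QUIC"):
    number = outer_protocol_number(transport)
    print(transport, number, middlebox_forwards(number))
```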

Dockerize Rails 7 App

In this post, I will show you how to Dockerize your Rails 7 app in a development environment. We will be using Tailwind for the CSS and PostgreSQL for the database. This setup includes hot reloading of assets on file changes, which is super nice. Software used in this post: Docker - […]

Worth Reading: EVPN/VXLAN with FRR on Linux Hosts

Jeroen Van Bemmel created another interesting netlab topology: EVPN/VXLAN between SR Linux fabric and FRR on Linux hosts based on his work implementing VRFs, VXLAN, and EVPN on FRR in netlab release 1.3.1.

Bonus point: he also described how to do multi-vendor interoperability testing with netlab.

If only he weren’t publishing his articles on a platform that’s almost as user-data-craving as Google.

Decrypting TLS Traffic with PolarProxy on Client PC

The tutorial provides detailed steps for decrypting HTTPS traffic generated on a client computer with PolarProxy installed. Decrypted traffic is then processed by Wireshark running together with PolarProxy on the same PC. HTTPS is the HTTP protocol running on top of TLS encryption, which is used by all websites as well as some other web […]

The post Decrypting TLS Traffic with PolarProxy on Client PC first appeared on Brezular's Blog.

It’s going to be really interesting to see if Comcast, Charter, and the other big cable companies raise prices later this year. The industry has changed, and it doesn’t […]