iCloud Private Relay: information for Cloudflare customers

iCloud Private Relay is a new Internet privacy service from Apple. It allows users with an iCloud+ subscription and iOS 15, iPadOS 15, or macOS Monterey on their devices to connect to the Internet and browse with Safari in a more secure and private way. Cloudflare is proud to work with Apple to operate portions of Private Relay infrastructure.

In this post, we’ll explain how website operators can ensure the best possible experience for end users using iCloud Private Relay. Additional material is available from Apple, including “Set up iCloud Private Relay on all your devices” and “Prepare Your Network or Web Server for iCloud Private Relay”, which covers network operator scenarios in detail.

How browsing works using iCloud Private Relay

The design of the iCloud Private Relay system ensures that no single party handling user data has complete information on both who the user is and what they are trying to access.

To do this, Private Relay uses modern encryption and transport mechanisms to relay traffic from user devices through Apple and partner infrastructure before sending traffic to the destination website.
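To make the separation of knowledge concrete, here is a toy model in Python. It is only a sketch and not Apple's or Cloudflare's actual protocol. It assumes a two-hop design in which the first relay sees the client address but not the destination, and the second relay sees the destination but not the client address. All names (`Request`, `ingress_view`, `egress_view`, `knows_both`) and the sample address and hostname are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    client_ip: str    # who the user is
    destination: str  # what they are trying to access


def ingress_view(req: Request) -> dict:
    # Assumption: the first relay sees the client address,
    # while the destination stays encrypted and hidden from it.
    return {"client_ip": req.client_ip, "destination": None}


def egress_view(req: Request) -> dict:
    # Assumption: the second relay sees the destination,
    # but only ever talks to the first relay, not the client.
    return {"client_ip": None, "destination": req.destination}


def knows_both(view: dict) -> bool:
    # True only if a single party holds both pieces of information.
    return view["client_ip"] is not None and view["destination"] is not None


# Documentation-range address and a placeholder hostname, for illustration only.
req = Request(client_ip="203.0.113.7", destination="example.com")
views = {"ingress": ingress_view(req), "egress": egress_view(req)}

for name, view in views.items():
    print(name, view, "knows both:", knows_both(view))
```

In this model neither relay's view contains both fields, which is the property the design aims for.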

Here’s a diagram depicting what connection metadata is available to whom when not using Private Relay:

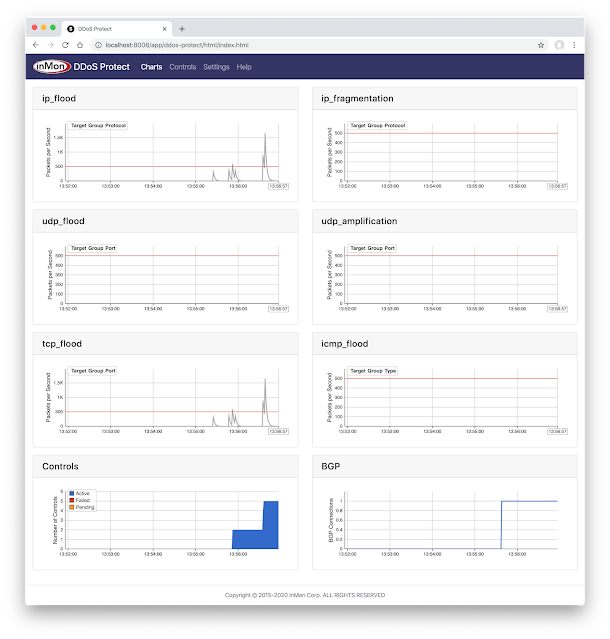

There are various ways I can defend against this. One (sorta ugly) option, which I don’t actually recommend (read to the bottom to see my logic), is to create a blackhole, also known as a null route.
