Experimenting with TCP Congestion Control (WIP)

Introduction

I have always found TCP congestion control algorithms fascinating, yet I know very little about them. So once in a while, I spend some time on them in the hope of gaining new insights. This blog post shares some of my experiments with various TCP congestion control algorithms. We will start with TCP Reno, then look at Cubic, and end with BBR. I am using Linux network namespaces to emulate the network topology for these experiments, which is much easier to run than a physical test bed.
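The post does not show the exact namespace topology, so the following is only a minimal sketch of what such a test bed could look like. It assumes a sender namespace and a receiver namespace joined by a veth pair, with `netem` on the sender side acting as the bottleneck. The names `ns1`/`ns2`/`veth1`/`veth2`, the `10.0.0.0/24` addresses, the delay and rate values, and the helper `netns_testbed_commands` are all hypothetical. The function only builds the `ip`/`tc`/`sysctl` command strings; running them requires root.

```python
# Dry-run sketch: build ip/tc/sysctl commands for a two-namespace test bed.
# All names (ns1, ns2, veth1, veth2), addresses, and netem values are hypothetical.

def netns_testbed_commands(delay_ms=50, rate_mbit=10, cc="reno"):
    """Return the shell commands (as strings) that would create the topology."""
    return [
        # Two isolated network stacks: ns1 = sender, ns2 = receiver.
        "ip netns add ns1",
        "ip netns add ns2",
        # A veth pair behaves like a cable; move one end into each namespace.
        "ip link add veth1 type veth peer name veth2",
        "ip link set veth1 netns ns1",
        "ip link set veth2 netns ns2",
        "ip netns exec ns1 ip addr add 10.0.0.1/24 dev veth1",
        "ip netns exec ns2 ip addr add 10.0.0.2/24 dev veth2",
        "ip netns exec ns1 ip link set veth1 up",
        "ip netns exec ns2 ip link set veth2 up",
        # netem on the sender's interface adds delay and a rate limit (the bottleneck).
        f"ip netns exec ns1 tc qdisc add dev veth1 root netem delay {delay_ms}ms rate {rate_mbit}mbit",
        # Select the congestion control algorithm for new TCP sockets in ns1
        # (per-namespace on recent kernels; "bbr" may need the tcp_bbr module loaded).
        f"ip netns exec ns1 sysctl -w net.ipv4.tcp_congestion_control={cc}",
    ]


if __name__ == "__main__":
    # Print only; executing these (e.g. with subprocess.run(cmd.split(), check=True))
    # requires root privileges.
    for cmd in netns_testbed_commands():
        print(cmd)
```

Switching the `cc` argument between `reno`, `cubic`, and `bbr` is one way the same topology could be reused for each algorithm in turn.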

TCP Reno

For many years, the main algorithm of congestion control was TCP Reno. The goal of congestion control is to determine

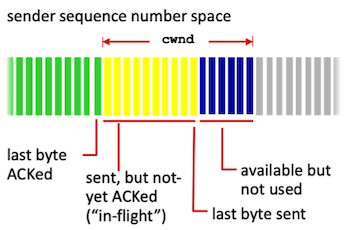

how much capacity is available in the network, so that source knows how

many packets it can safely have in transit (Inflight). Once a source has these packets in transit, it uses the ACK’s

arrival as a signal that packets are leaving the network, and therefore it’s safe to send more packets into the network.
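A useful reference point for "how many packets can safely be in transit" is the bandwidth-delay product (BDP): the bottleneck bandwidth multiplied by the round-trip time. The sketch below is a rough back-of-the-envelope helper, assuming fixed 1500-byte packets, a single flow, and no queueing; the function names and the 10 Mbit/s / 50 ms example path are hypothetical.

```python
def bdp_packets(bandwidth_bps: float, rtt_s: float, packet_bytes: int = 1500) -> float:
    """Bandwidth-delay product expressed in packets.

    Roughly how many packets can be in flight without building a queue at the
    bottleneck (assumes fixed-size packets and no competing traffic).
    """
    bdp_bytes = bandwidth_bps * rtt_s / 8  # bits -> bytes
    return bdp_bytes / packet_bytes


def throughput_bps(cwnd_packets: float, rtt_s: float, packet_bytes: int = 1500) -> float:
    """Approximate sending rate when a full window is delivered once per RTT."""
    return cwnd_packets * packet_bytes * 8 / rtt_s


# Hypothetical path: 10 Mbit/s bottleneck, 50 ms round-trip time.
print(round(bdp_packets(10e6, 0.050), 1))  # 41.7
```

Keeping fewer than about 42 packets in flight on this path would leave the link partly idle, while keeping more would only add packets to the bottleneck queue.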

By using ACKs to pace the transmission of packets, TCP is self-clocking. The number of packets that TCP can inject into the network is controlled by the congestion window (cwnd).
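To make self-clocking concrete, here is a toy discrete-event sketch, not Reno itself: the window is fixed (no slow start, no loss, no delayed ACKs). It assumes one bottleneck link that transmits a packet every `service_time` plus a fixed `prop_rtt` of propagation delay; `simulate_self_clocking` and its parameters are hypothetical names. The sender fills its window once, and after that every arriving ACK releases exactly one new packet.

```python
import heapq


def simulate_self_clocking(cwnd, prop_rtt, service_time, total_packets):
    """Return ACK arrival times for a sender with a fixed congestion window.

    Toy model: one bottleneck link that transmits a packet every `service_time`,
    plus `prop_rtt` of propagation delay for the data and its ACK. No losses,
    no delayed ACKs, and cwnd never changes.
    """
    acks = []            # min-heap of (ack_time, seq) for packets in flight
    link_free_at = 0.0   # when the bottleneck finishes its current packet
    next_seq = 0
    ack_times = []

    def send(now):
        nonlocal next_seq, link_free_at
        # Wait for the bottleneck if it is busy (this is where a queue forms).
        start = max(now, link_free_at)
        link_free_at = start + service_time
        heapq.heappush(acks, (link_free_at + prop_rtt, next_seq))
        next_seq += 1

    # At t=0 the sender fills its whole window.
    for _ in range(min(cwnd, total_packets)):
        send(0.0)

    # Self-clocking: every ACK that arrives releases exactly one new packet.
    while acks:
        t, _seq = heapq.heappop(acks)
        ack_times.append(t)
        if next_seq < total_packets:
            send(t)
    return ack_times


print(simulate_self_clocking(cwnd=4, prop_rtt=10.0, service_time=1.0, total_packets=8))
# [11.0, 12.0, 13.0, 14.0, 22.0, 23.0, 24.0, 25.0]
```

In this model the "pipe" holds about 11 packets (`(prop_rtt + service_time) / service_time`). With `cwnd=4`, ACKs arrive in bursts separated by idle gaps, so the link is underused. With a window larger than the pipe (for example `cwnd=20`), ACKs arrive back to back one `service_time` apart: the link stays busy, and the surplus packets simply wait in the bottleneck queue.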

Congestion Window:

Hedge 145: Roundtable on Professional Liability

The software world is known for overdue projects, cost overruns, lots of defects, and lots of failure all the way around. Many other engineering fields have stricter requirements for taking on projects, along with liability insurance that drives correct practice and care. The networking world, and the larger IT world, however, has neither of these things. Does this make IT folks less likely to “do the right thing,” or is the self-regulation we have today enough? Join Tom Ammon, Eyvonne Sharp, and Russ White as they discuss the possibilities of professional liability in information technology.

Know Your Adversaries: The Top Network Bad Actors

The bad guys are out to steal your data, identity, money, and anything else they can lay their digital hands on. Here's a rundown of today's major adversaries.

Collecting and Processing Data from Pokec using Python

Recently I wrote two Python scripts, config.py and pokec_get_stats.py, which I can use to collect statistics about the users in a particular room of the popular Slovak social network Pokec. My goal is to practice Python programming and test the functionality of Selenium WebDriver. Selenium WebDriver is used to emulate user interaction […]

The post Collecting and Processing Data from Pokec using Python first appeared on Brezular's Blog.

Kubernetes Unpacked 008: Go – The Language Of Kubernetes

In this episode, Michael catches up with Josh Duffney, Cloud Developer Advocate at Microsoft, to talk about Go (golang). Kubernetes, Docker, and Terraform are all written in Go. Josh and Michael talk about their journey into Kubernetes and Go, some fun projects to play with, how to learn Go, and why understanding certain programming languages is crucial for breaking into Kubernetes.

The post Kubernetes Unpacked 008: Go – The Language Of Kubernetes appeared first on Packet Pushers.